Unit testing is an invaluable component of any software testing strategy. However, in the embedded space, unit tests are frequently overlooked in favor of integration and hardware-in-the-loop testing. While these other testing strategies are important, unit testing is often the easiest place to begin testing, as you typically don't need real hardware to start verifying the correctness of your code.

Here at Blues we’ve recently invested a lot of time into unit testing and we want to share what we’ve learned with you. In this post, we’ll take a comprehensive look at how we unit test one of our core libraries, note-c. We’ll cover everything from simple testing of C code, to more advanced topics like mocking, test coverage and memory leak checking.

What is note-c?

note-c is our C library for communicating with a Blues Notecard. The Notecard combines prepaid cellular connectivity, low-power design, and secure "off-the-internet" communications in one small System-on-Module (SoM). It's the easiest way to connect your edge device to the cloud via cellular. note-c typically runs on a host microcontroller and supports communicating with the Notecard via an I2C or serial connection.

A Basic Test

Let’s walk through a couple of example unit tests, starting with a simple one, JIsPresent_test.cpp. For the most part, we’ve written one test source file per library function, with the naming convention <function name>_test.cpp. JIsPresent is a supplemental cJSON function we added that checks for the presence of a particular field in a JSON object. Here’s the function itself:

    bool JIsPresent(J *rsp, const char *field)
    {
        if (rsp == NULL) {
            return false;
        }
        return (JGetObjectItem(rsp, field) != NULL);
    }

Short and sweet. Before writing the test for a function, we consider all the paths through the function, and ask questions about what should happen in different scenarios:

- What’s supposed to happen if the rsp parameter is NULL? What if field is NULL?

- What’s supposed to happen if JGetObjectItem returns NULL? What if it returns a non-NULL value?

These questions form the basis of the various sections in our test file. Here’s the test code, written using the Catch2 framework:

    #ifdef NOTE_C_TEST

    #include <catch2/catch_test_macros.hpp>
    #include "n_lib.h"

    namespace
    {

    TEST_CASE("JIsPresent")
    {
        NoteSetFnDefault(malloc, free, NULL, NULL);

        const char field[] = "req";
        J *json = JCreateObject();
        JAddStringToObject(json, field, "note.add");

        SECTION("NULL JSON") {
            CHECK(!JIsPresent(NULL, ""));
        }

        SECTION("NULL field") {
            CHECK(!JIsPresent(json, NULL));
        }

        SECTION("Field not present") {
            CHECK(!JIsPresent(json, "cmd"));
        }

        SECTION("Field present") {
            CHECK(JIsPresent(json, "req"));
        }

        JDelete(json);
    }

    }

    #endif // NOTE_C_TEST

We use Catch2 sections to further divide a test file into individual unit tests. In fact, sections were a major factor in our decision to use Catch2 over other testing frameworks, like GoogleTest. Sections allow you to easily reuse common code between unit tests in a visually intuitive way. In this case, we have 4 sections that check that the right thing happens when:

- We pass NULL for the JSON object.

- We pass a valid JSON object but NULL for the field.

- We pass a valid JSON object and a valid field but the field isn’t present in the object.

- We pass a valid JSON object and a valid field and the field is present in the object.
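To make the reuse concrete: Catch2 runs the body of a TEST_CASE once per SECTION, so the code before the sections (setup) and the code after them (cleanup) executes fresh for every section. The plain C sketch below imitates that control flow by hand. It is not how Catch2 is implemented, and Fixture, has_field, run_case, and the scenario functions are hypothetical names invented for this illustration.

```c
#include <assert.h>   /* for callers that assert on run_case() */
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical fixture standing in for the JSON object built by the test. */
typedef struct {
    char *field;   /* heap-allocated copy of the one field name it holds */
} Fixture;

typedef bool (*Scenario)(const Fixture *fx);

static int setup_count = 0;
static int teardown_count = 0;

/* Stand-in for the function under test: does the fixture hold `name`? */
static bool has_field(const Fixture *fx, const char *name)
{
    if (fx == NULL || name == NULL) {
        return false;
    }
    return strcmp(fx->field, name) == 0;
}

/* One function per "section". */
static bool null_fixture(const Fixture *fx)  { (void)fx; return !has_field(NULL, "req"); }
static bool null_name(const Fixture *fx)     { return !has_field(fx, NULL); }
static bool field_absent(const Fixture *fx)  { return !has_field(fx, "cmd"); }
static bool field_present(const Fixture *fx) { return has_field(fx, "req"); }

/* Runs each scenario with its own setup and teardown; returns the failure count. */
int run_case(void)
{
    const Scenario scenarios[] = { null_fixture, null_name, field_absent, field_present };
    int failures = 0;

    for (size_t i = 0; i < sizeof(scenarios) / sizeof(scenarios[0]); i++) {
        /* Shared setup: repeated for every scenario. */
        Fixture fx;
        fx.field = (char *)malloc(4);
        if (fx.field == NULL) {
            failures++;
            continue;
        }
        memcpy(fx.field, "req", 4);   /* 3 characters plus the terminator */
        setup_count++;

        if (!scenarios[i](&fx)) {
            failures++;
        }

        /* Shared teardown: also repeated for every scenario. */
        free(fx.field);
        teardown_count++;
    }
    return failures;
}
```

Calling run_case() from a main function performs four setups and four teardowns, one pair per scenario, which is the isolation that sections provide without writing the loop yourself.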

A More Complex Test

JIsPresent is a very simple function. Let’s step up the complexity a tad and look at the function NoteNewRequest:

    J *NoteNewRequest(const char *request)
    {
        J *reqdoc = JCreateObject();
        if (reqdoc != NULL) {
            JAddStringToObject(reqdoc, c_req, request);
        }
        return reqdoc;
    }

For this function, we’d like to make sure the right thing happens when JCreateObject returns NULL. To do that, we need a straightforward way of controlling the return value of JCreateObject. This is where mocking comes in. Since we’re focused just on the behavior of NoteNewRequest, we don’t care about how JCreateObject works internally. We’ll treat JCreateObject as a black box, and we’ll make sure that NoteNewRequest does the right thing whether it returns NULL or a valid JSON object.

We use the Fake Function Framework (fff) for mocking. The framework is implemented in a single header file, fff.h. Because of this, we can simply bundle the entire framework with the note-c source code, and a developer doesn’t need to install anything to get started with mocking.

Despite its small size, fff is a powerful framework. You can check the arguments that were passed to mock functions, how many times a mock function gets called, and swap out mocked functions for entirely new implementations, among other features. You can see many of these capabilities in action by browsing our unit tests. Here’s how we’re using fff to test NoteNewRequest:

    #ifdef NOTE_C_TEST

    #include <catch2/catch_test_macros.hpp>
    #include "fff.h"

    #include "n_lib.h"

    DEFINE_FFF_GLOBALS
    FAKE_VALUE_FUNC(J *, JCreateObject)

    namespace
    {

    TEST_CASE("NoteNewRequest")
    {
        NoteSetFnDefault(malloc, free, NULL, NULL);

        RESET_FAKE(JCreateObject);

        const char req[] = "{ \"req\": \"card.info\" }";

        SECTION("JCreateObject fails") {
            JCreateObject_fake.return_val = NULL;

            CHECK(!NoteNewRequest(req));
        }

        SECTION("JCreateObject succeeds") {
            J* reqJson = (J *)NoteMalloc(sizeof(J));
            REQUIRE(reqJson != NULL);
            memset(reqJson, 0, sizeof(J));
            reqJson->type = JObject;
            JCreateObject_fake.return_val = reqJson;

            CHECK(NoteNewRequest(req));

            JDelete(reqJson);
        }
    }

    }

    #endif // NOTE_C_TEST

To get started, we include fff.h and use the DEFINE_FFF_GLOBALS macro. After that, we can specify the functions we want to mock. In this case we’re mocking JCreateObject, so we have FAKE_VALUE_FUNC(J *, JCreateObject). FAKE_VALUE_FUNC is used when mocking a function with a return value, while FAKE_VOID_FUNC is used when the function has a void return type. Inside the parentheses, we have a comma-separated set of parameters: the return type, the function name, and, if applicable, the types of the function’s parameters. JCreateObject takes no parameters, so the macro is done after we specify the function name.

Now we’re set up to manually control the return value of JCreateObject. In our first section, we check that NoteNewRequest behaves as intended when JCreateObject returns NULL. We do this by setting the return_val variable of JCreateObject_fake, the mock function struct, to NULL. Then, when NoteNewRequest calls JCreateObject, it’ll return NULL, and we can ensure that NoteNewRequest handles this scenario correctly.
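To demystify what a fake gives us, here is a hand-rolled one in plain C. This is a simplified sketch, not the code fff actually generates: Doc, create_doc, new_request, and CreateDocFake are hypothetical stand-ins for J, JCreateObject, NoteNewRequest, and the JCreateObject_fake struct, and the real fff struct tracks more than this (argument history, for example).

```c
#include <assert.h>   /* for callers that assert on the check functions */
#include <stddef.h>

/* Hypothetical stand-in for the J type. */
typedef struct Doc {
    int type;
} Doc;

/* Bookkeeping for the fake: how often it was called and what it should return. */
typedef struct {
    unsigned call_count;
    Doc *return_val;
    Doc *(*custom_fake)(void);   /* optional replacement implementation */
} CreateDocFake;

static CreateDocFake create_doc_fake;

/* Clears the bookkeeping so one test cannot influence the next. */
static void reset_create_doc_fake(void)
{
    create_doc_fake.call_count = 0;
    create_doc_fake.return_val = NULL;
    create_doc_fake.custom_fake = NULL;
}

/* The fake itself: linked in place of the real constructor. */
static Doc *create_doc(void)
{
    create_doc_fake.call_count++;
    if (create_doc_fake.custom_fake != NULL) {
        return create_doc_fake.custom_fake();
    }
    return create_doc_fake.return_val;
}

/* Function under test, shaped like NoteNewRequest. */
static Doc *new_request(int type)
{
    Doc *doc = create_doc();
    if (doc != NULL) {
        doc->type = type;
    }
    return doc;
}

/* Failure path: the dependency reports that allocation failed. */
int check_failure_path(void)
{
    reset_create_doc_fake();
    create_doc_fake.return_val = NULL;

    Doc *result = new_request(7);
    return result == NULL && create_doc_fake.call_count == 1;
}

/* Success path: the dependency hands back an object we control. */
int check_success_path(void)
{
    Doc storage = { 0 };
    reset_create_doc_fake();
    create_doc_fake.return_val = &storage;

    Doc *result = new_request(7);
    return result == &storage && storage.type == 7 && create_doc_fake.call_count == 1;
}
```

Both check functions return 1 when the function under test behaves as expected. The value of a framework like fff is that a single macro line produces this struct, the reset logic, and the replacement function for each mocked dependency.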

Building and Running the Tests

We’ve shown how we test two functions from note-c, but how do we build and run them? To start, let’s examine the (abridged) note-c file hierarchy:

    ├── CMakeLists.txt
    ├── n_atof.c
    ├── n_b64.c
    ├── n_cjson.c
    .
    .
    .
    └── test
        ├── CMakeLists.txt
        ├── include
        │   ├── fff.h
        │   ├── i2c_mocks.h
        │   ├── test_static.h
        │   └── time_mocks.h
        └── src
            ├── crcAdd_test.cpp
            ├── crcError_test.cpp
            ├── i2cNoteReset_test.cpp
            ├── i2cNoteTransaction_test.cpp
            ├── JAddBinaryToObject_test.cpp
            ├── JAllocString_test.cpp
            .
            .
            .

CMake drives our build process. The library source files live in the project root, and the root CMakeLists.txt controls how the library gets built. The test subdirectory contains an include and a src directory for test headers and source files, respectively. A CMakeLists.txt in the test subdirectory controls building the tests.

Before compiling any test code, we need to run cmake with -DNOTE_C_BUILD_TESTS=1. This flag causes the macro NOTE_C_TEST to be defined; without it, no unit tests will be built. As you can see in the example tests above, the unit test source files are ifdef-ed to nothing if this macro isn’t defined. This was mainly done as a workaround for Arduino. We use note-c in our Arduino library, note-arduino, and Arduino’s build system will recursively suck in all source files from note-c. That includes our unit tests, which we don’t want to include in the Arduino build.

Another effect of -DNOTE_C_BUILD_TESTS=1 is that our main CMakeLists.txt will call add_subdirectory on the test directory, which pulls in test/CMakeLists.txt. In that file, each test gets added to the build like this:

    add_test(<function_name>)

add_test is a macro. It creates an executable CMake target with the name function_name. It expects to find a file called <function_name>_test.cpp in test/src. It also handles linking the test against Catch2 and the note-c library, and sets up the appropriate include paths. For more detail, you can explore test/CMakeLists.txt on GitHub.

To run cmake with tests enabled, we run

    cmake -B build/ -DNOTE_C_BUILD_TESTS=1

We can then build the tests with

    cmake --build build/ -- -j

After building, all our test executables can be found under build/test. They can be run individually, but to run the entire test suite, we use CTest:

    ctest --test-dir build/ --output-on-failure

A successful test run will indicate a 100% pass rate and will tell us how long the tests took to run:

100% tests passed, 0 tests failed out of 87

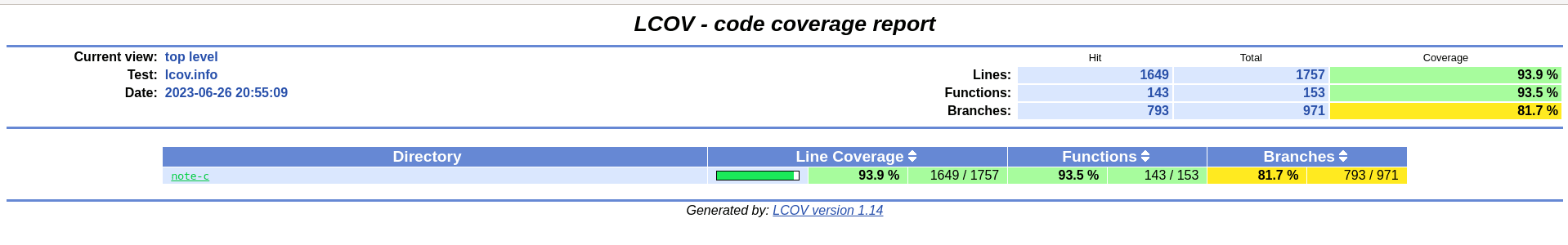

Total Test time (real) = 0.21 secCoverage

We produce and monitor test coverage data to make sure we’re exercising and testing the vast majority of the code in note-c. To generate test coverage data, we use gcov. We won’t go line-by-line through the test/CMakeLists.txt coverage logic, but you can explore it yourself by looking for NOTE_C_COVERAGE in that file. In summary, this flag:

- Links note-c against gcov and adds compiler flags to produce coverage data.

- Excludes third-party code from coverage (e.g. cJSON code).

- Turns on branch coverage.

- Adds a custom Make target, coverage, which builds and runs the tests and produces an lcov output file so we can get a graphical view of test coverage.

To enable coverage, we run cmake with

    cmake -B build/ -DNOTE_C_BUILD_TESTS=1 -DNOTE_C_COVERAGE=1

And we build and run the tests with

    cmake --build build/ -- coverage -j

At the end of the test run, we’ll get a summary of the coverage:

    Summary coverage rate:
      lines......: 93.9% (1649 of 1757 lines)
      functions..: 93.5% (143 of 153 functions)
      branches...: 81.7% (793 of 971 branches)

If we want to dive into the coverage statistics for a particular source file or function, we can run this helpful Bash one-liner:

    cd build/test/coverage && genhtml --branch-coverage lcov.info -o temp && cd temp

If we then use a web browser to open up the index.html file in the temp directory, we can navigate through the note-c source files and look at line-by-line coverage data:

For instance, if we click note-c and then open n_request.c, we can see the coverage for NoteNewRequest:

The blue highlighting indicates that the given line was executed at least once during the course of running the tests. The plus signs by the conditional indicate that both branches of this particular if statement were covered.
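Branch coverage matters for functions like NoteNewRequest because an if with no else can reach full line coverage while one of its two outcomes is never exercised. The sketch below shows this on a hypothetical function of the same shape; tag_buffer and both check functions are invented for illustration and are not part of note-c.

```c
#include <assert.h>   /* for callers that assert on the check functions */
#include <stddef.h>

/* Hypothetical function shaped like NoteNewRequest: a single `if`, no `else`. */
int tag_buffer(char *buf, char tag)
{
    int written = 0;
    if (buf != NULL) {   /* two branch outcomes: true and false */
        buf[0] = tag;
        written = 1;
    }
    return written;
}

/* Executes every line of tag_buffer, but only the true outcome of the `if`. */
int check_valid_buffer(void)
{
    char buf[1] = { 0 };
    return tag_buffer(buf, 'x') == 1 && buf[0] == 'x';
}

/* Adds the false outcome: no new lines execute, yet branch coverage rises. */
int check_null_buffer(void)
{
    return tag_buffer(NULL, 'x') == 0;
}
```

With only check_valid_buffer, a line coverage report for tag_buffer would look complete while the branch report would show one outcome missing. Adding check_null_buffer is what covers both outcomes of the conditional.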

Checking for Memory Errors

Having a suite of unit tests for every library function makes it easy to also check those functions for memory errors and leaks. We can enable memory checking on each unit test with valgrind by adding the flag -DNOTE_C_MEM_CHECK=1 to our cmake command:

    cmake -B build/ -DNOTE_C_BUILD_TESTS=1 -DNOTE_C_MEM_CHECK=1

From here, we can invoke CTest’s built-in memcheck action:

    ctest --test-dir build/ --output-on-failure -T memcheck

We make a few tweaks to how memory checking is done with this snippet in our main CMakeLists.txt:

    if(NOTE_C_MEM_CHECK)
        # Go ahead and make sure we can find valgrind while we're here.
        find_program(VALGRIND valgrind REQUIRED)
        message(STATUS "Found valgrind: ${VALGRIND}")

        set(MEMORYCHECK_COMMAND_OPTIONS "--leak-check=full --error-exitcode=1")
    endif(NOTE_C_MEM_CHECK)

First, we make sure the valgrind program is installed. Then, we augment the base valgrind command by adding

- --leak-check=full: This makes the testing take longer, but if a leak is found, the output will describe each one in detail.

- --error-exitcode=1: This is a convenience for scripting that makes it so any memory error causes valgrind to exit with return code 1. If this isn’t specified, valgrind will exit with the same return code as the underlying process it’s attached to.

Note that the tests run significantly slower when checking for memory errors. If valgrind catches a memory error in one of the tests, that test will fail, and that’ll cause the overall test run to fail.
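For a feel of the kind of mistake this catches, consider a test that allocates and never frees, such as a test that forgets its final JDelete call. The sketch below imitates the bookkeeping with a simple counter. It is only an illustration of the idea of matching every allocation with a free, and valgrind does not work this way internally; counting_malloc, counting_free, and both scenario functions are hypothetical names.

```c
#include <assert.h>   /* for callers that assert on the scenario results */
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Number of allocations made through the wrappers that are not yet freed. */
static int live_allocations = 0;

static void *counting_malloc(size_t size)
{
    void *p = malloc(size);
    if (p != NULL) {
        live_allocations++;
    }
    return p;
}

static void counting_free(void *p)
{
    if (p != NULL) {
        live_allocations--;
    }
    free(p);
}

/* A well-behaved test body: returns how many allocations it left behind. */
int balanced_scenario(void)
{
    int before = live_allocations;
    char *buf = (char *)counting_malloc(16);
    if (buf == NULL) {
        return -1;
    }
    memcpy(buf, "card.info", 10);   /* 9 characters plus the terminator */
    counting_free(buf);
    return live_allocations - before;
}

/* A test body that "forgets" its cleanup before reporting its result. */
int forgetful_scenario(void)
{
    int before = live_allocations;
    char *buf = (char *)counting_malloc(16);
    if (buf == NULL) {
        return -1;
    }
    memcpy(buf, "card.info", 10);
    /* Bug being illustrated: the result is taken with buf still allocated. */
    int leaked = live_allocations - before;
    counting_free(buf);   /* freed afterwards so the sketch itself stays leak-free */
    return leaked;
}
```

Here balanced_scenario reports zero outstanding allocations and forgetful_scenario reports one. Under the memcheck action, the real equivalent of that second case would be reported as a leak and would fail the test.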

Continuous Integration (CI)

We use GitHub Actions and Docker as the backbone of our CI strategy for note-c. Every pull request or push to the master branch triggers a run of the unit tests, which includes generating coverage data and checking for memory errors. In a future blog post, we’ll dive into the details of this CI pipeline.

Conclusion

That concludes this blog post on our note-c unit testing architecture. All components in this stack are open source, including note-c itself, so feel free to use our approach in any of your embedded C projects! We’re also always open to questions and feedback. Don’t hesitate to reach out on discuss.blues.com!