Webinar Summary

The following summary is auto-generated from the webinar recording.

AI is changing how embedded and IoT developers write firmware. From bare-metal C to Arduino sketches and Python on single-board computers, modern assistants can speed up repetitive tasks, suggest patterns, and generate working code. That said, using AI without structure often creates more work: hallucinations, fragile edits, and tangled code. This webinar provides practical, battle-tested ways to integrate AI into firmware workflows so you get faster, cleaner, and more reliable results.

Model Context Protocol and the Blues Expert MCP

MCP, the Model Context Protocol, is a standard way for language models to call out to external tools, APIs, and data stores. Think of it as a universal adapter that lets LLMs query authoritative sources rather than guessing. That reduces hallucinations and gives the AI access to live documentation and domain logic.
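Under the hood, MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request that names the tool and passes its arguments. The C sketch below only formats such a message so its shape is visible. The tool name `api_validate` in the usage example is a hypothetical placeholder, not necessarily what Blues Expert calls its tools, and the sketch does no JSON escaping; a real MCP client would use a JSON library.

```c
#include <stdio.h>
#include <string.h>

/* Sketch: format a JSON-RPC 2.0 "tools/call" request, the message an MCP
 * client sends when it asks a server to run one of its tools. Tool names
 * depend on the server; nothing here is specific to Blues Expert.
 * No JSON escaping is done: `tool_name` must not contain quotes or
 * backslashes, and `args_json` must already be a valid JSON object.
 * Returns the message length, or -1 if the tool name would need escaping
 * or the buffer is too small. */
int mcp_format_tool_call(char *buf, size_t buf_len, int id,
                         const char *tool_name, const char *args_json)
{
    if (strpbrk(tool_name, "\"\\") != NULL) {
        return -1; /* would need escaping; use a real JSON library */
    }
    int n = snprintf(buf, buf_len,
                     "{\"jsonrpc\":\"2.0\",\"id\":%d,\"method\":\"tools/call\","
                     "\"params\":{\"name\":\"%s\",\"arguments\":%s}}",
                     id, tool_name, args_json);
    if (n < 0 || (size_t)n >= buf_len) {
        return -1; /* encoding error or truncated output */
    }
    return n;
}
```

For example, calling it with id `1`, tool name `api_validate`, and arguments `{"req":"card.version"}` produces `{"jsonrpc":"2.0","id":1,"method":"tools/call","params":{"name":"api_validate","arguments":{"req":"card.version"}}}`.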

Blues Expert is an MCP that embeds Notecard documentation, SDK best practices, design patterns, and schema validation into the AI's toolset. When an LLM is unsure about something, it can call Blues Expert endpoints for:

- API validation against Notecard schemas

- Firmware entry points and SDK-specific best practices

- Document search of the developer docs via a RAG index

- Targeted best-practice suggestions for power management, templates, and message formatting

The result: LLM-generated code that follows real Notecard usage patterns and fewer moments where the assistant invents fake APIs.

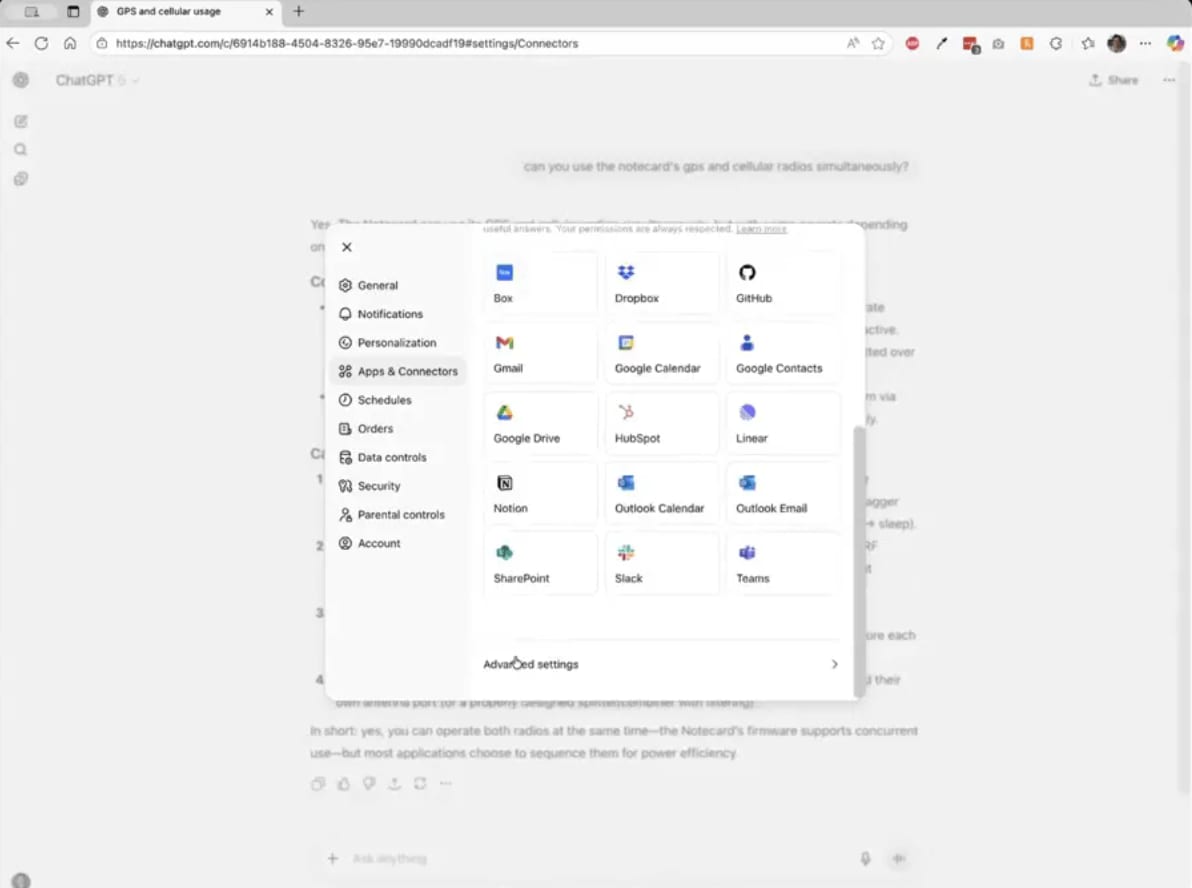

Many modern assistants support connectors or apps that let you register an MCP. Enabling a connector lets the assistant call the MCP and return validated, authoritative answers about device APIs and best practices. That often changes a generic answer into a correct, nuanced one.

Using Blues Expert MCP in ChatGPT

Which AI Tool to Use?

There are many AI coding tools and integrations. Choose a tool that suits your workflow and constraints:

- GitHub Copilot: integrated into VS Code, useful for inline completions and quick automation; free tier available for limited agent requests.

- Cursor: a fork of VS Code focused on agentic workflows; good for letting the agent run commands and work across the whole project.

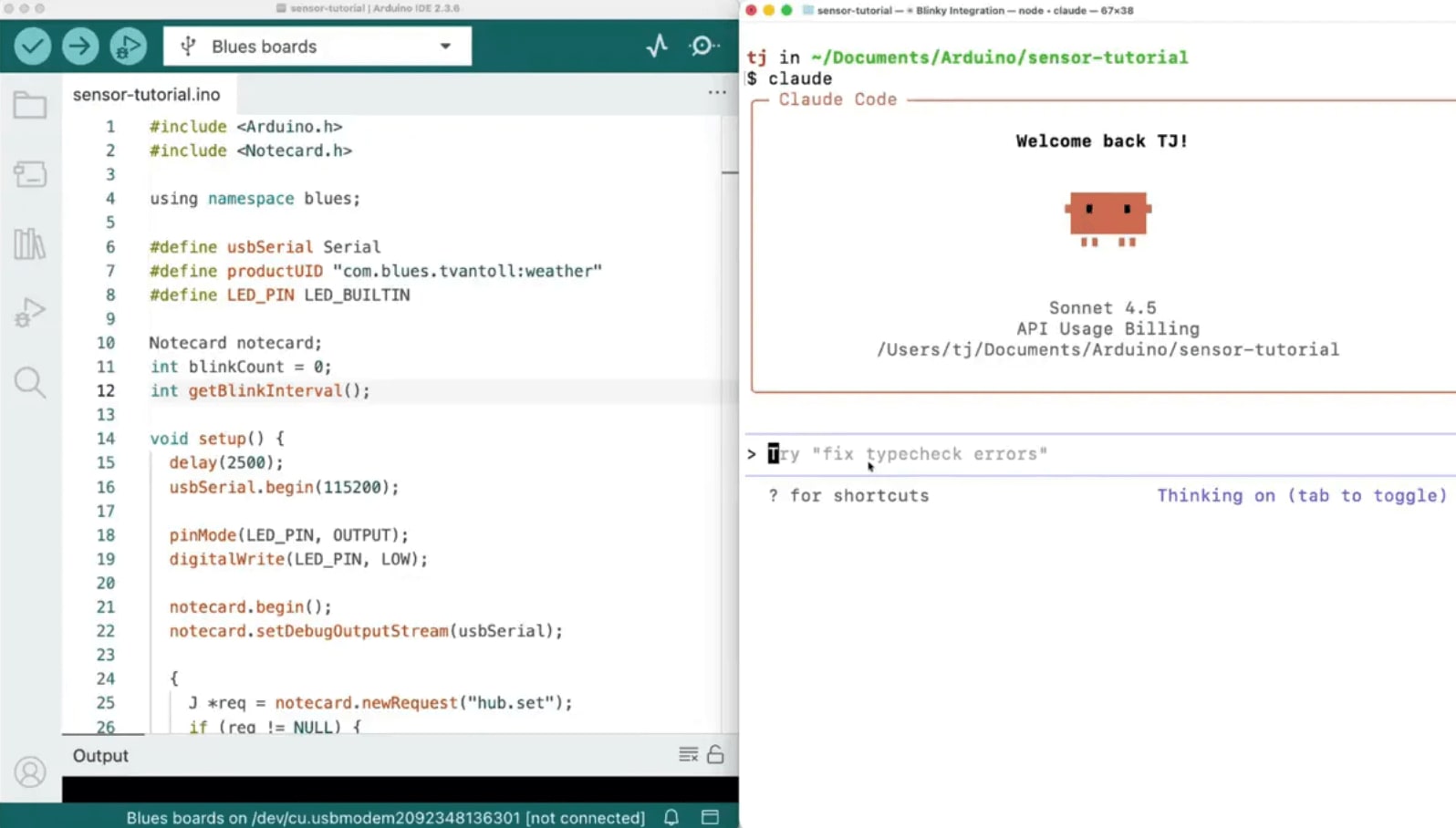

- Claude Code: a CLI-first, terminal-based assistant. It suits firmware work well because it runs anywhere you have a terminal, right alongside the vendor tools and command-line build flows that embedded projects depend on.

For multi-platform firmware work, terminal-based assistants often beat editors that assume a single IDE. A CLI tool can run build commands, parse serial output, and edit files in-place across diverse toolchains.

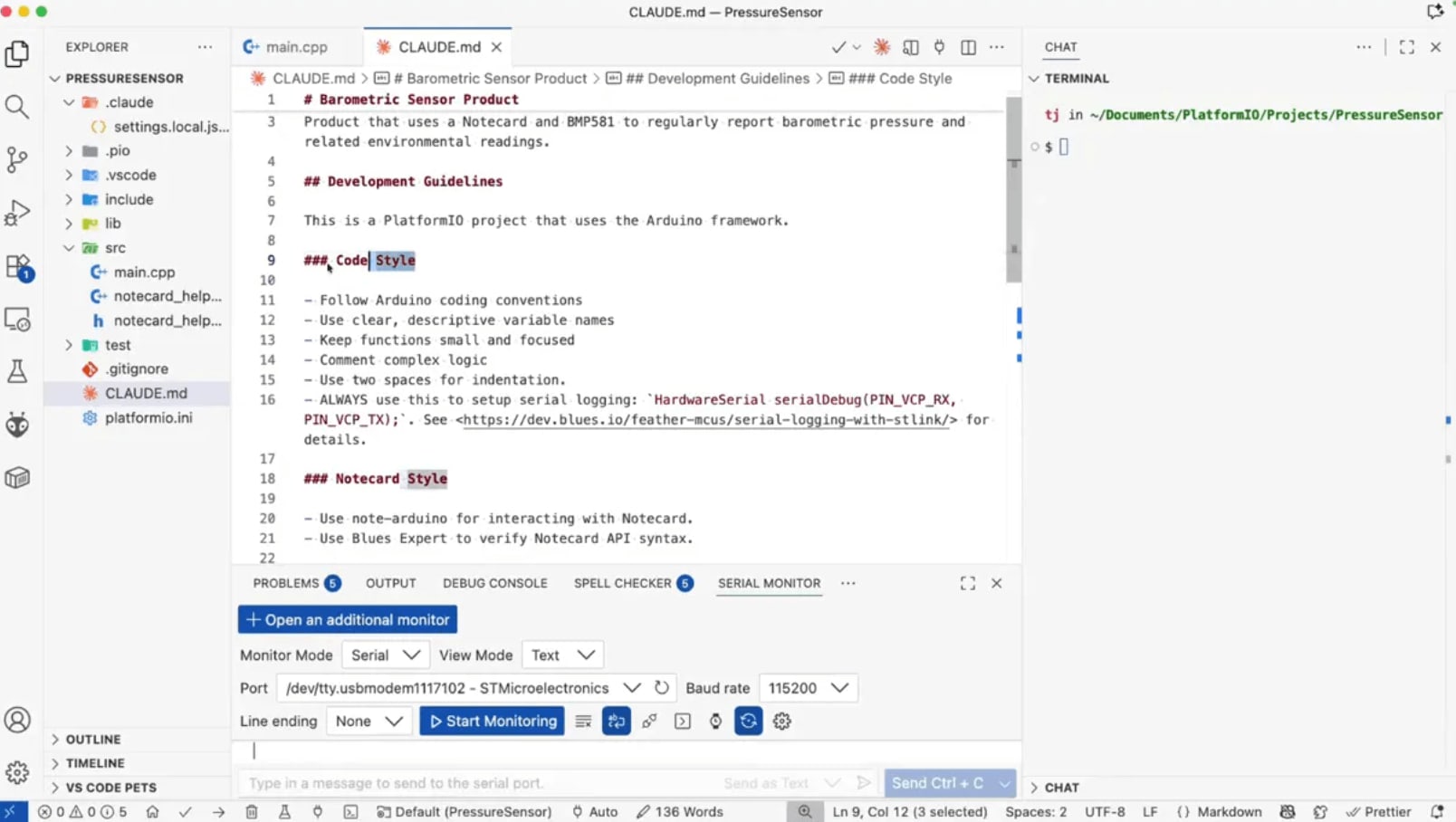

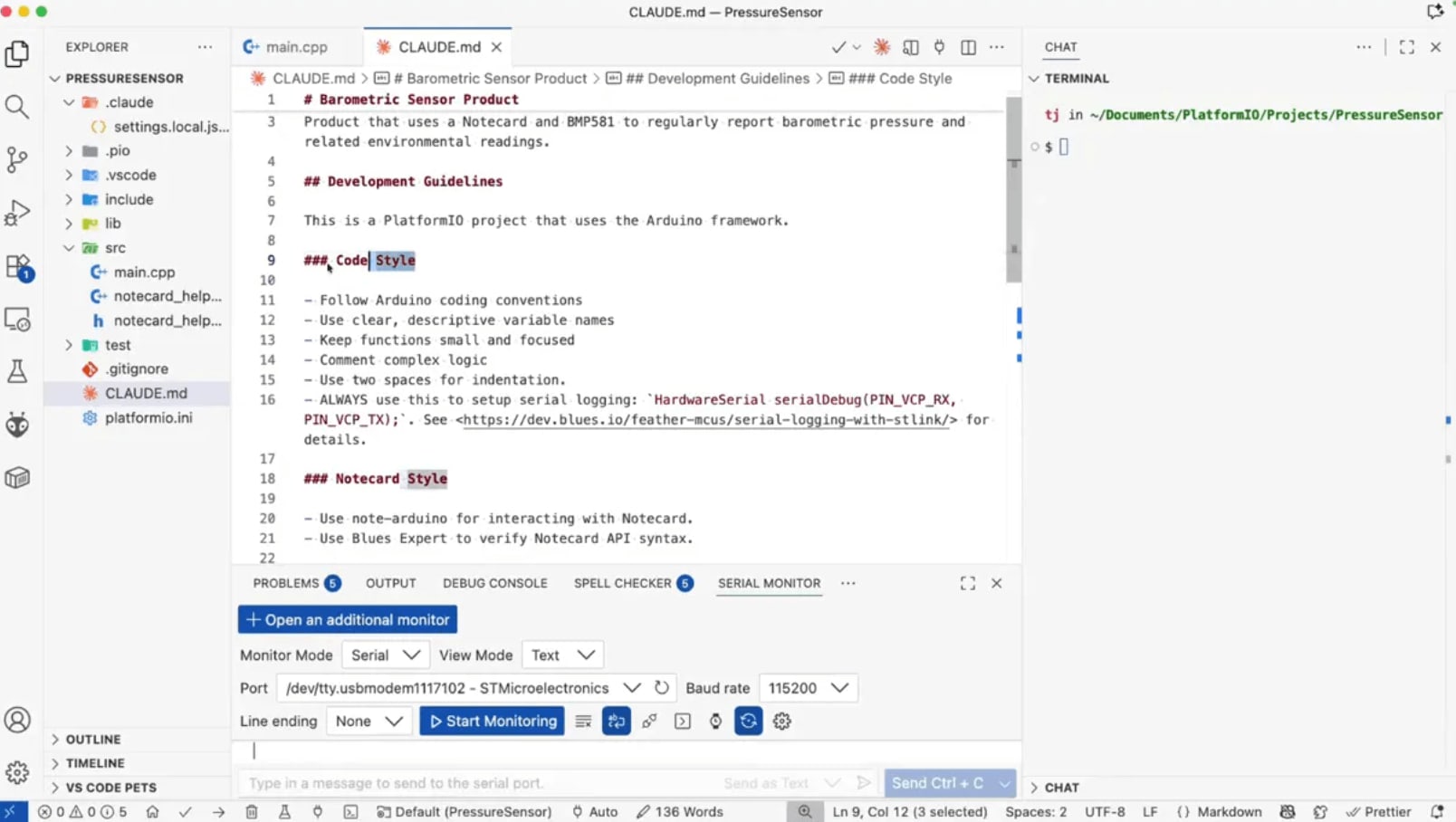

Provide Better Context for the AI

LLMs improve dramatically when you give them project context. There are two practical ways to do that:

- Use a project-level instruction file (for example, CLAUDE.md for Claude Code or copilot-instructions.md for GitHub Copilot). This file acts as a persistent system prompt that encodes styles, libraries, hardware details, and operational constraints.

- Install an MCP like Blues Expert so the assistant has built-in access to Notecard APIs, templates, and validation tools.

Example of what to include in a project instruction file:

- Target MCU and toolchain (STM32 + PlatformIO)

- Sensors, screen drivers, and their libraries

- Preferred code style and indentation

- Power and connectivity expectations (how often to sync, template usage)

Concrete Claude Code Workflow

With a terminal-based assistant pointed at a project folder, you can ask for something like "Add logging and documentation to the sensor module." The assistant will explore the repo, propose edits, and ask for your approval before applying them. For larger tasks, it calls MCP tools to validate API requests and consult the docs so it does not invent invalid calls.

Tools Inside Blues Expert MCP

When the assistant calls the Blues Expert MCP it can pick from a toolbox of endpoints. Key examples:

- API Validate: Check JSON requests against the Notecard API schema. Prevents the assistant from generating fake request types.

- API Docs: Return authoritative parameter details for a particular API call.

- DocSearch: Query a RAG index of the developer documentation so answers are grounded in live docs.

- Firmware Entry Point: Provide starter scaffolding and SDK-specific usage patterns.

- Firmware Best Practices: Focused guidance on power management, template usage, and bandwidth optimization.
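To see why request validation matters, consider the crudest possible version of it: refusing any request name that is not known to exist. The C sketch below does that with a short allowlist. The list is a hand-picked subset of real Notecard requests chosen for illustration, not the full API, and it checks only the request name; parameter names, types, and required fields are what schema validation such as API Validate adds on top.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hand-picked subset of real Notecard request names, for illustration
 * only. The actual API has many more requests. */
static const char *const KNOWN_REQUESTS[] = {
    "card.version", "card.status", "card.time",  "card.voltage",
    "hub.set",      "hub.sync",    "hub.status",
    "note.add",     "note.get",    "note.template",
};

/* Return true if `req` exactly matches a name on the allowlist.
 * Only the name is checked; full schema validation also checks
 * parameters and their types. */
bool is_known_request(const char *req)
{
    if (req == NULL) {
        return false;
    }
    for (size_t i = 0; i < sizeof KNOWN_REQUESTS / sizeof KNOWN_REQUESTS[0]; i++) {
        if (strcmp(req, KNOWN_REQUESTS[i]) == 0) {
            return true;
        }
    }
    return false;
}
```

A made-up request such as `card.magic` is rejected, while `note.add` passes.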

Practical Use Cases and Workflows

Greenfield vs Brownfield

Greenfield projects let you specify your constraints up front and enforce project-wide instruction files. Brownfield projects require retrofitting connectivity without breaking existing code. The right MCP and prompting strategy let the assistant add a separate Notecard library and keep existing logic intact.

Retrofitting an Existing App

An effective prompt for retrofitting might be to tell the assistant to "use Blues Expert, add Notecard connectivity, and operate hands-free to add files rather than edit the host firmware."

The assistant should:

- Create a standalone Notecard library that wraps all Notecard interaction.

- Add templates for bandwidth-efficient data packaging.

- Insert retry logic for cold boot interactions so the MCU only talks to the Notecard when it is ready.

- Keep your main firmware file unchanged except for initialization hooks.
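The cold-boot retry item can be sketched as a small, non-blocking state machine that the Notecard wrapper drives from the main loop. This is a minimal sketch under assumptions: the type and function names are hypothetical (not part of any Blues SDK), the probe itself (for example, sending `card.version` and checking for a response) is left to the caller, and the backoff values are arbitrary.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical names for illustration; not part of note-c. */
typedef enum { NC_READY, NC_WAIT, NC_GAVE_UP } nc_boot_status;

typedef struct {
    unsigned attempts;     /* probes reported so far */
    unsigned max_attempts; /* give up after this many failed probes */
    uint32_t wait_ms;      /* delay before the next probe */
    uint32_t max_wait_ms;  /* cap for the exponential backoff */
} nc_boot_retry;

void nc_boot_retry_init(nc_boot_retry *r, unsigned max_attempts,
                        uint32_t first_wait_ms, uint32_t max_wait_ms)
{
    r->attempts = 0;
    r->max_attempts = max_attempts;
    r->max_wait_ms = max_wait_ms;
    r->wait_ms = first_wait_ms < max_wait_ms ? first_wait_ms : max_wait_ms;
}

/* Report the result of one probe. Returns NC_READY on success, NC_GAVE_UP
 * once max_attempts probes have failed, or NC_WAIT with *wait_out set to
 * how long to wait before probing again. *wait_out is only written on
 * NC_WAIT. */
nc_boot_status nc_boot_retry_report(nc_boot_retry *r, bool probe_ok,
                                    uint32_t *wait_out)
{
    r->attempts++;
    if (probe_ok) {
        return NC_READY;
    }
    if (r->attempts >= r->max_attempts) {
        return NC_GAVE_UP;
    }
    *wait_out = r->wait_ms;
    /* Double the delay for next time, capped at max_wait_ms (no overflow). */
    r->wait_ms = (r->wait_ms > r->max_wait_ms / 2) ? r->max_wait_ms : r->wait_ms * 2;
    return NC_WAIT;
}
```

In use, the wrapper probes, passes the result to `nc_boot_retry_report()`, waits `*wait_out` milliseconds on `NC_WAIT`, and starts normal Notecard traffic only after `NC_READY`. Keeping this state inside the Notecard module means the host firmware needs nothing more than an initialization hook.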

This approach keeps responsibilities separated and reduces the risk of accidental regressions in the original application.

Debugging with Serial Logs and Compiler Feedback

Paste your serial logs into the conversation, or pipe them straight into a terminal-based assistant. That creates a tight feedback loop for runtime issues: compile, capture output, feed the logs back, and get targeted fixes. Likewise, pasting compiler errors into the conversation and asking for a fix often resolves build failures quickly.
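That loop works best when every serial log line has the same, predictable shape, so the assistant can tie a line back to the code that printed it. A minimal sketch, assuming a hypothetical `log_line()` helper and a `[uptime][LEVEL][module] message` format of our own choosing (not an Arduino or Blues convention):

```c
#include <stdarg.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical helper: format one log line as
 * "[<uptime_ms>][<LEVEL>][<module>] <message>" into `buf`.
 * A fixed prefix makes serial output easy to grep and easy for an
 * assistant to correlate with source code.
 * Returns the line length, or -1 on error or truncation. */
int log_line(char *buf, size_t buf_len, uint32_t uptime_ms,
             const char *level, const char *module, const char *fmt, ...)
{
    int prefix = snprintf(buf, buf_len, "[%lu][%s][%s] ",
                          (unsigned long)uptime_ms, level, module);
    if (prefix < 0 || (size_t)prefix >= buf_len) {
        return -1;
    }
    va_list args;
    va_start(args, fmt);
    int body = vsnprintf(buf + prefix, buf_len - (size_t)prefix, fmt, args);
    va_end(args);
    if (body < 0 || (size_t)(prefix + body) >= buf_len) {
        return -1;
    }
    return prefix + body;
}
```

For example, `log_line(buf, sizeof buf, 12345, "INFO", "sensor", "temp=%.2f", 21.5)` yields `[12345][INFO][sensor] temp=21.50`; the firmware would then print `buf` to the serial port.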

OCR Pinouts and Datasheets

Drop screenshots of pinouts or datasheet PDFs into the assistant. Modern models can perform OCR on images and extract pin names and functions. Use that to ask for wiring diagrams, I2C bus suggestions, or maximum operating voltages from a datasheet without manual searching.

Building UIs with LVGL and AI

Creating polished display UIs is tedious and error-prone. LVGL offers powerful widgets but requires careful layout work. When integrating LVGL with sensors and Notecard:

- Be explicit in prompts about the exact display driver, resolution, and input device.

- Request concrete layout constraints such as margins, alignment, and axis labels to avoid overlapping widgets.

- Iterate quickly: deploy, collect runtime logs or screenshots, and feed those back so the assistant can fix refresh or redraw artifacts.

With the right prompt and iterations, you can produce a functional LVGL-based UI in minutes and then refine it to handle redraw bugs, missing legends, or other display quirks.
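Layout constraints are easiest for an assistant to honor when they are stated as numbers. As an illustration, the hypothetical helper below computes non-overlapping rectangles for an evenly divided grid with an outer margin and a gap between cells. The results could be applied with LVGL's `lv_obj_set_pos()` and `lv_obj_set_size()`, although LVGL's built-in flex and grid layouts may be a better fit in a real project.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical rectangle type, independent of LVGL. */
typedef struct {
    int32_t x, y, w, h;
} rect_t;

/* Compute the rectangle of cell `index` (row-major) in a `cols` x `rows`
 * grid filling a `screen_w` x `screen_h` display, with `margin` pixels
 * around the edge and `gap` pixels between cells. Integer division may
 * leave a few spare pixels at the right/bottom, but cells never overlap.
 * Returns false for an invalid index or if there is no room. */
bool grid_cell(int32_t screen_w, int32_t screen_h, int32_t margin, int32_t gap,
               int32_t cols, int32_t rows, int32_t index, rect_t *out)
{
    if (cols <= 0 || rows <= 0 || index < 0 || index >= cols * rows) {
        return false;
    }
    int32_t usable_w = screen_w - 2 * margin - (cols - 1) * gap;
    int32_t usable_h = screen_h - 2 * margin - (rows - 1) * gap;
    if (usable_w < cols || usable_h < rows) {
        return false; /* less than one pixel per cell */
    }
    int32_t cell_w = usable_w / cols;
    int32_t cell_h = usable_h / rows;
    int32_t col = index % cols;
    int32_t row = index / cols;
    out->x = margin + col * (cell_w + gap);
    out->y = margin + row * (cell_h + gap);
    out->w = cell_w;
    out->h = cell_h;
    return true;
}
```

On a 320x240 display with a 10 px margin, an 8 px gap, and a 2x2 grid, each cell is 146x106 px and the bottom-right cell starts at (164, 124), ending exactly 10 px from the right and bottom edges.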

Testing

AI is excellent at generating automated tests and helping with test-driven development. Use it to create unit tests for pure logic and API use cases, generate integration tests that validate Notecard request formatting and template usage, and spot edge cases and propose scenarios your current tests don't cover.

Be careful: the assistant sometimes generates redundant or shallow tests! Keep a human in the loop to make sure each test adds real value.
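Request-formatting tests like these can run on a host PC, with no Notecard attached, when the formatting lives in a pure function. A minimal sketch, assuming a hypothetical `format_reading_request()` helper, a `sensors.qo` Notefile, and `temp`/`humidity` body fields; firmware built on note-c would usually build requests with its JSON helpers rather than `snprintf`. The NaN check is the kind of edge case worth asking the assistant to probe, because `printf` renders NaN as `nan`, which is not valid JSON.

```c
#include <assert.h>
#include <math.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper under test: build a note.add request for one sensor
 * reading. Returns false if a value is not finite (printf would emit
 * "nan"/"inf", which is not valid JSON) or if the request does not fit. */
bool format_reading_request(char *buf, size_t buf_len, double temp_c, double humidity)
{
    if (!isfinite(temp_c) || !isfinite(humidity)) {
        return false;
    }
    int n = snprintf(buf, buf_len,
                     "{\"req\":\"note.add\",\"file\":\"sensors.qo\","
                     "\"body\":{\"temp\":%.2f,\"humidity\":%.1f}}",
                     temp_c, humidity);
    return n >= 0 && (size_t)n < buf_len;
}

/* Host-side unit tests: no Notecard or MCU needed. */
void test_format_reading_request(void)
{
    char buf[128];
    char tiny[16];
    bool ok;

    /* Happy path: check the exact request text. */
    ok = format_reading_request(buf, sizeof buf, 21.5, 55.0);
    assert(ok);
    assert(strcmp(buf, "{\"req\":\"note.add\",\"file\":\"sensors.qo\","
                       "\"body\":{\"temp\":21.50,\"humidity\":55.0}}") == 0);

    /* Negative temperatures keep their sign. */
    ok = format_reading_request(buf, sizeof buf, -5.0, 55.0);
    assert(ok && strstr(buf, "\"temp\":-5.00") != NULL);

    /* Edge cases: non-finite values and a buffer that is too small. */
    assert(!format_reading_request(buf, sizeof buf, NAN, 55.0));
    assert(!format_reading_request(buf, sizeof buf, 21.5, INFINITY));
    assert(!format_reading_request(tiny, sizeof tiny, 21.5, 55.0));
}
```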

Best Practices and Gotchas

- Start small: Avoid giving the assistant enormous amounts of context all at once. Small, focused iterations work better.

- Use an MCP: Ground the assistant with API validation and live docs to reduce hallucinations.

- Provide explicit libraries: Tell the assistant which driver or library to use when ambiguity would cause it to pick the wrong dependency.

- Keep connectivity code isolated: Put Notecard interactions in a separate module to reduce coupling.

- Feed logs and errors back: Pipe compiler output and serial logs into the assistant for faster fixes.

- Validate generated requests: Use the MCP's API Validate tool before sending messages to real hardware or cloud endpoints.