Routing Data to Cloud: Google Cloud Platform


In previous tutorials you've learned about the Blues Notecard, and used it to collect data and send it to Notehub, the Blues cloud service.

One powerful feature of Notehub is routes, which allow you to forward your data from Notehub to a public cloud like AWS, Azure, or Google Cloud, to a data cloud like Snowflake, to dashboarding services like Datacake or Ubidots, or to a custom HTTP or MQTT-based endpoint. The tutorial below guides you through routing data to Google Cloud Platform and teaches you how to build visualizations using that data.

Don't see a cloud or backend that you need? Notehub is able to route data to virtually any provider. If you're having trouble setting up a route, reach out in our forum and we will help you out.

Introduction

This tutorial should take approximately 30-40 minutes to complete.

In this tutorial, you'll learn how to connect your Notecard-powered app to Google Cloud Platform, and learn how to start creating simple visualizations with sensor data.

This tutorial assumes you've already completed the initial Sensor Tutorial to capture sensor data, saved it in a Notefile called sensors.qo, and sent that data through the Notecard to Notehub (or that you've already created your own app with sensor data and are ready to connect your app to external services).

Create a Route

A Route is an external API, or server location, where Notes can be forwarded upon receipt.

Routes are defined in Notehub for a Project and can target Notes from one or more Fleets or all Devices. A Project can have multiple routes defined and active at any one time.

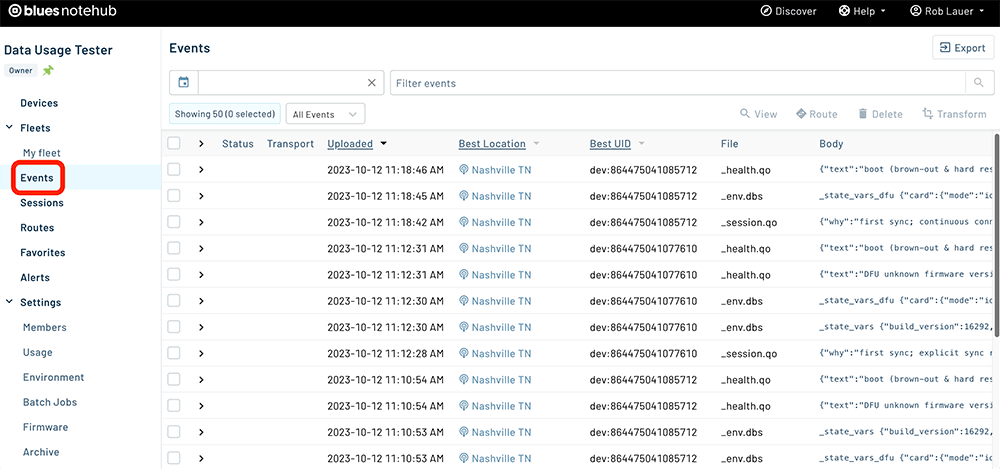

Before you create a Route, ensure the data you want to route is available in Notehub by navigating to the Events view:

We'll start with a simple route that will pass Notecard events through to webhook.site, where you can view the full payload sent by Notehub. Using this service is a useful way to debug routes, add a simple health-check endpoint to your app, and familiarize yourself with Notehub's Routing capabilities.

-

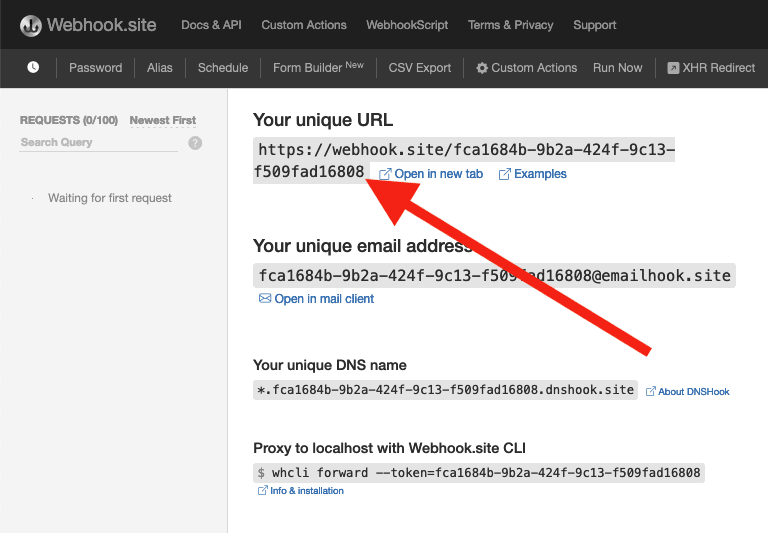

Navigate to webhook.site. When the page loads, you'll be presented with a unique URL that you can use as a Route destination. Copy that URL for the next step.

-

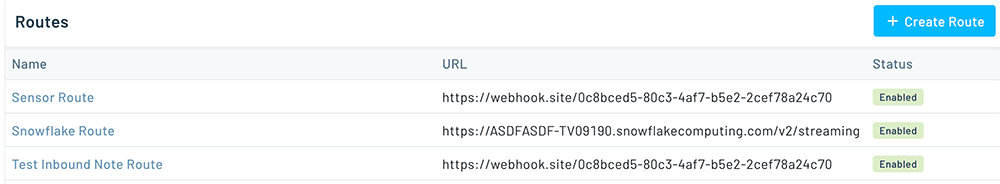

Navigate to the Notehub.io project for which you want to create a route and click on the Routes menu item in the left nav.

-

Click Create Route.

-

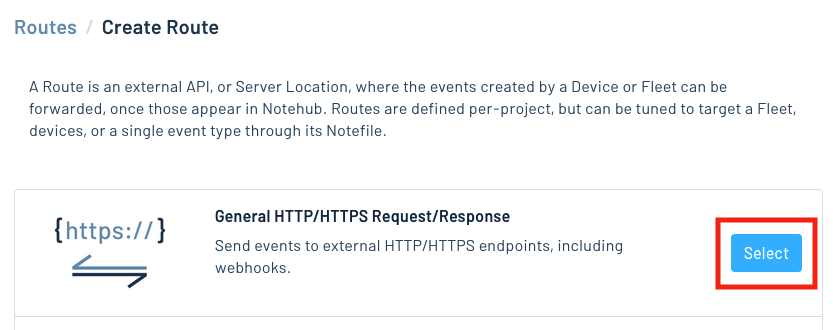

Select the General HTTP/HTTPS Request/Response route type.

-

Give the route a name (for example, "Health").

-

For the Route URL, use the unique URL you obtained from webhook.site.

-

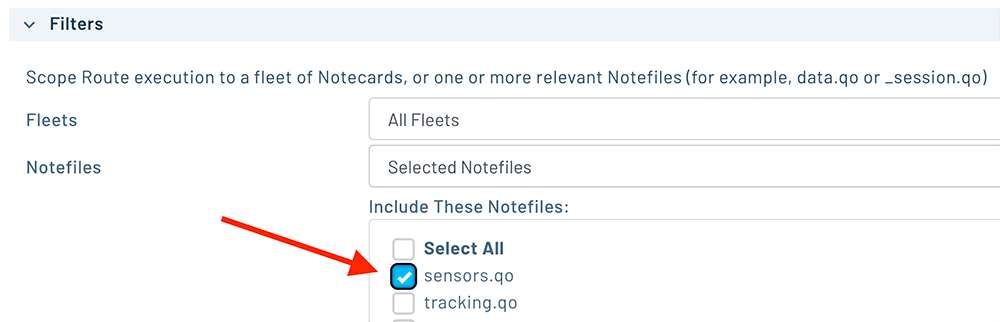

In the Filters section, choose Selected Notefiles and check the box(es) next to the Notefile(s) you want to route. For example, we used sensors.qo for the sensor tutorial.

-

Make sure the Enabled switch at the top remains selected, and click Create Route.

-

Only Notes received after the Route is created are synced automatically. If new Notes have been received since you created the Route, proceed to the next step.

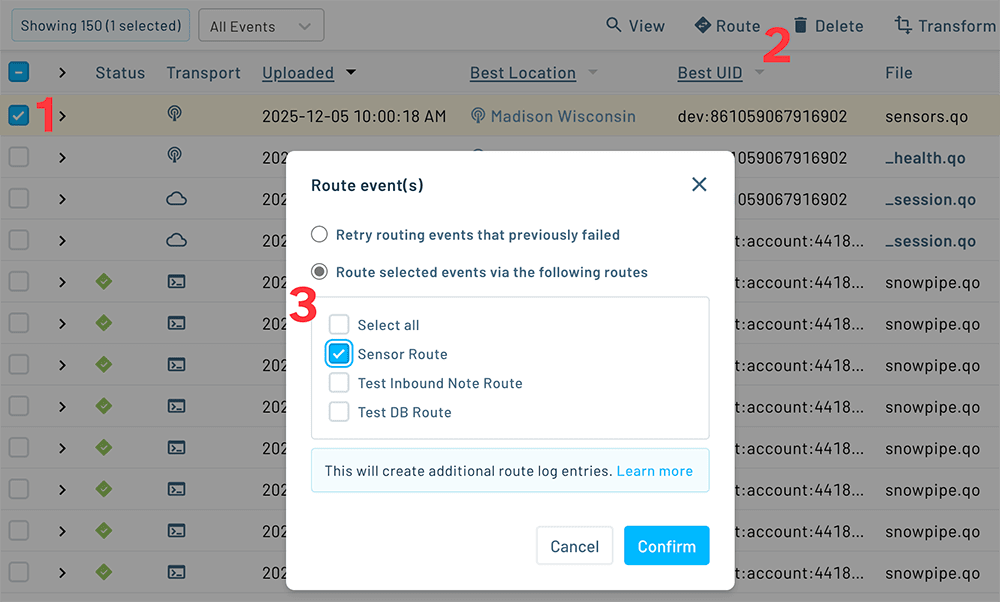

Alternatively, you can manually route existing Notes by (1) checking the box next to an event, (2) clicking the Route button, and (3) choosing the Route you would like to use:

-

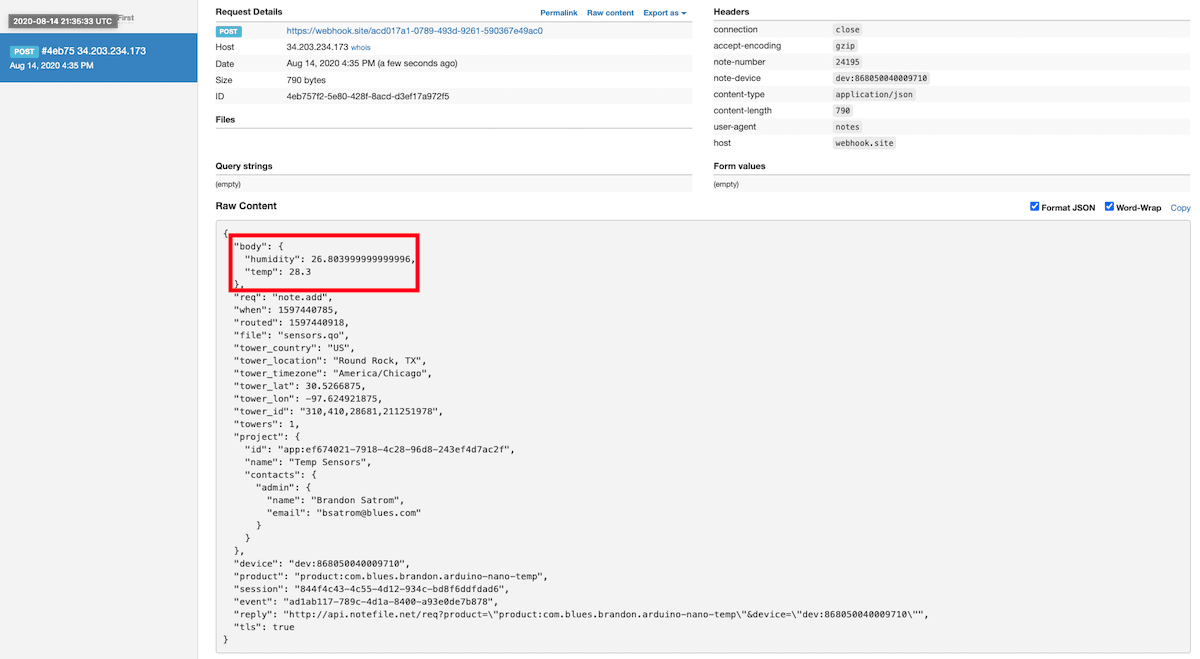

Return to webhook.site. This page will update automatically with data sent from Notehub. The data from your sensor is contained within the body attribute. Notice that Notehub provides you with a lot of information by default. In the next section, we'll look at using transformations to customize what Notehub sends in a Route.
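Under the hood, webhook.site does little more than accept each HTTP POST from Notehub and display it. If you'd like a similar debugging endpoint of your own, the sketch below uses only Python's standard library (`http.server`). The names `extract_body`, `RouteHandler`, and `serve`, and the default port 8080, are illustrative choices and not part of Notehub. The sketch also assumes an untransformed route, so the Note's data sits under `body`. Notehub can only deliver to it if the server is reachable at a public URL.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def extract_body(raw: bytes) -> dict:
    """Return the `body` attribute of an untransformed Notehub route request."""
    # bytes.decode and json.loads both raise ValueError subclasses on bad input.
    event = json.loads(raw.decode("utf-8"))
    if not isinstance(event, dict):
        raise ValueError("expected a JSON object")
    body = event.get("body", {})
    if not isinstance(body, dict):
        raise ValueError("expected 'body' to be a JSON object")
    return body


class RouteHandler(BaseHTTPRequestHandler):
    """Print the body of each POSTed event, roughly what webhook.site shows."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = extract_body(self.rfile.read(length))
        except ValueError:
            self.send_response(400)
            self.end_headers()
            return
        print("Note body:", body)
        self.send_response(200)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Handle requests on all interfaces until interrupted (hypothetical helper)."""
    HTTPServer(("0.0.0.0", port), RouteHandler).serve_forever()
```

Calling `serve()` starts the listener. You would then use its public address as the Route URL in place of the webhook.site URL.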

Use JSONata to Transform JSON

Before moving on to routing data to an external service, let's briefly explore using JSONata expressions to transform the data Notehub routes.

As mentioned above, Notehub provides a lot of information in each Route request. You may want to trim down what you send to the external service, or you might need to transform the payload to adhere to a format expected by that service. Either way, Notehub supports shaping the data sent to a Route using JSONata.

NOTE: To learn more about JSONata later, have a look at the Blues JSONata Guide.

Transform Your Data

Let's add a simple JSONata expression to the webhook.site Route created in the last section.

-

Navigate to the Routes page in Notehub and click on the Route you wish to edit.

-

In the Transform JSON drop-down, select JSONata Expression.

-

In the JSONata Expression text area, add the following query. It selects the temp and humidity from the body, creates a location field that concatenates the tower_location and tower_country fields, and creates a time field.

```
{
  "temp": body.temp,
  "humidity": body.humidity,
  "location": tower_location & ', ' & tower_country,
  "time": when
}
```

-

Click Apply changes. Then, navigate back to webhook.site. As requests come in, you'll see your custom, JSONata-transformed payload in the Raw Content section.
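To see the expression's logic in a more familiar form, the function below approximates the same transformation in Python. It is only an illustration, because Notehub evaluates the JSONata itself. The function name `transform` is hypothetical, and this sketch treats missing tower fields as empty strings.

```python
def transform(event: dict) -> dict:
    """Approximate the JSONata expression above for a single Notehub event."""
    body = event.get("body", {})
    result = {}
    # Like the JSONata object constructor, skip keys whose source field is absent.
    for key in ("temp", "humidity"):
        if key in body:
            result[key] = body[key]
    # tower_location & ', ' & tower_country (missing fields become "" here).
    location = event.get("tower_location", "")
    country = event.get("tower_country", "")
    result["location"] = f"{location}, {country}"
    if "when" in event:
        result["time"] = event["when"]
    return result
```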

JSONata is simple, powerful, and flexible, and will come in handy as you create Routes for your external services. To explore JSONata further, visit our JSON Fundamentals guide.

Route to an External Service

Now that you've created your first Route and learned how to use JSONata to shape the data sent by a Route, you can connect Notehub to an external service.

For this tutorial, you'll connect your app to Google Cloud Platform by using serverless Cloud Run Functions.

Create a Google Cloud Platform Project

As this is a Google service, you'll need a free Google account to access Google Cloud Platform (GCP).

Navigate to the GCP Console and create a new project, naming it whatever you'd like.

Create a Firestore Instance

GCP offers a variety of cloud storage and database options, from Cloud Storage buckets (similar to Amazon S3 buckets), to MongoDB-compatible NoSQL databases, to relational databases like MySQL and PostgreSQL.

For the purposes of this tutorial, we are going to use Firestore, a modern NoSQL database.

-

Within your GCP Console, use the search box at the top to search for "firestore".

-

Click the button to Create a Firestore database, enter an arbitrary Database ID, and click Create Database.

-

You'll be prompted to create a new collection to store data. Name your collection something memorable (e.g. "notecard-data"). Ignore the "Add its first document" section, as that will be populated automatically when we start syncing data from Notehub.

-

Click Save to finish creating the Firestore collection.

One advantage of using NoSQL databases is that we don't have to pre-define fields or data elements in the collection.

Create a Cloud Run Function

Google Cloud Run Functions are serverless functions that are hosted and executed in the Google Cloud. We will use a Cloud Run Function to receive data from Notehub and then populate the Firestore collection just created.

-

Within your GCP Console, use the search box at the top to search for "cloud run".

-

In the Write a function section, choose a programming language. In this guide we will use Python, but you are welcome to use whichever language you are most familiar with.

-

Name your service whatever you like, choose a region close to you, and check Allow public access if you don't want to authenticate with a service account to use this function.

Alternatively, you may want to Require authentication to require an authorization token to be sent along with the Notehub route request.

Copy the provided Endpoint URL as you'll need it when configuring the Route in Notehub.

-

On the subsequent page, you may be prompted to enable the Cloud Build API.

-

At this point, you should have access to the cloud editor for your function. Assuming you chose Python, you will have two default files to edit, main.py and requirements.txt, with some boilerplate code stubbed in.

-

While numerous pre-installed packages are available, we need the google-cloud-firestore package to interface with Firestore. Open the requirements.txt file and append this line to whatever is already in that file:

```
google-cloud-firestore
```

-

Back in main.py, we will create a new Python function. This function needs to accept JSON data from a Notehub Route and populate the Firestore collection. Be sure to set the database and COLLECTION values to the names you used previously.

```python
import logging

from google.cloud import firestore

# Use your own Firestore Database ID and collection name here.
db = firestore.Client(database="notecard-sensor-data")
COLLECTION = "notecard-data"


def add_data(request):
    """HTTP entry point: store the JSON body of a Notehub Route request in Firestore."""
    # Parse JSON safely; errors raised while parsing the body are caught here.
    try:
        payload = request.get_json(silent=False)
    except Exception:
        return ("Invalid JSON body", 400)

    if payload is None:
        return ("Missing JSON body", 400)

    # Enforce a JSON object at the top level, since a Firestore document is a map.
    if not isinstance(payload, dict):
        return ("JSON body must be an object", 400)

    try:
        # document() with no argument creates a reference with an auto-generated ID.
        doc_ref = db.collection(COLLECTION).document()
        doc_ref.set(payload)
        return ({"id": doc_ref.id}, 200)
    except Exception as e:
        logging.exception("Failed to write to Firestore: %s", e)
        return ("Internal error", 500)
```

In this function, the request parameter is a Flask Request object, and its body holds the JSON payload sent from the Notehub Route. Here is an example payload that may be sent from Notehub:

```json
{
  "temp": 35.8,
  "timestamp": 1614116793000
}
```

-

Next, set the Entry Point to the name of the function in your code (add_data in this example), then click Save and redeploy.

-

It's time to test the cloud function! After deploying the function, click on the Test button at the top of your screen and paste in the sample JSON payload provided above.

-

After the payload is parsed, click the Test in Cloud Shell button. This will open a Cloud Shell and pre-populate a test cURL request for you.

-

Provided the test was successful, navigate back to your Firestore collection and view the test data.
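The Cloud Shell test boils down to an HTTP POST with a JSON body. If you'd prefer to send the same request from your own machine, here is a minimal sketch using Python's standard `urllib`. The function names are hypothetical. The bearer token is only needed if you chose Require authentication. For Cloud Run it is typically an identity token, for example the output of `gcloud auth print-identity-token`.

```python
import json
import urllib.request
from typing import Optional


def build_test_request(
    url: str, payload: dict, token: Optional[str] = None
) -> urllib.request.Request:
    """Build a JSON POST similar to the Cloud Shell cURL test."""
    headers = {"Content-Type": "application/json"}
    if token is not None:
        # Only needed when the function requires authentication.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `send(build_test_request(url, {"temp": 35.8, "timestamp": 1614116793000}))` should return a small JSON object containing the new document's id. Here, `url` is your function's Endpoint URL.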

Create a Route in Notehub

-

Back in your Notehub project, open the Routes dashboard and click the Create Route button.

-

Choose Google Cloud as the route type.

-

Provide a name for your route and paste your Cloud Run Function "Endpoint URL" in the URL field.

-

If you selected Require authentication when creating the Cloud Run Function, you will need to enter the Authorization Token for the appropriate service account.

-

Under Notefiles, choose Select Notefiles and select sensors.qo. In the Data section, select JSONata Expression and enter the following expression:

```
{
  "temp": body.temp,
  "timestamp": when * 1000
}
```

-

Make sure your route is marked as Enabled and click the Apply changes button.

-

As new sensors.qo Notes come in, you'll see the JSONata-transformed payload appear in your Firestore collection.
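Notehub's `when` field is a Unix timestamp in seconds. The expression multiplies it by 1000 to store milliseconds, which is what the BigQuery query later in this tutorial expects when it calls TIMESTAMP_MILLIS. The sketch below mirrors the route's expression in Python to make the conversion concrete. `route_payload` and `timestamp_to_datetime` are hypothetical names.

```python
from datetime import datetime, timezone


def route_payload(event: dict) -> dict:
    """Approximate the route's JSONata: keep body.temp and convert `when` to ms."""
    # Unlike JSONata, this raises KeyError if a field is missing.
    return {
        "temp": event["body"]["temp"],
        "timestamp": event["when"] * 1000,  # epoch seconds -> epoch milliseconds
    }


def timestamp_to_datetime(ms: int) -> datetime:
    """Interpret a stored millisecond timestamp as a UTC datetime."""
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)
```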

Build Data Visualizations

Google Cloud Platform doesn't provide a means for directly generating data visualizations from Firestore collections. However, you can use Google Firebase, along with BigQuery as a data source, to generate a dynamic dashboard in Looker Studio.

Set Up Firebase and BigQuery

-

In order to stream data from your Firestore collection to BigQuery, we'll use the Google-supported extension called (appropriately) Stream Firestore to BigQuery.

-

Click the link above to install the extension and you'll be prompted to create a new Firebase project. When asked if you have an existing Google Cloud Project, just click the link provided to associate Firebase with your existing GCP project.

NOTE: During the setup process you may be prompted to enable additional services so Firebase can properly stream data from Firestore to BigQuery.

-

On the final step, you'll need to supply information about your Firestore instance. You can leave all of the values set to their defaults, except for these:

- Firestore Instance ID is your Firestore database name.

- Firestore Instance Location must match the GCP location of your Firestore database, which you can find in the settings for your database.

- Collection path is your Firestore collection name.

-

Click Install extension and wait until the extension is configured (this may take a few minutes). Once the installation of the extension and the initial BigQuery sync are complete, proceed to the next step.

-

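We now need to create a new dataset in BigQuery to hold the data extracted from our Firestore collection. Open a Cloud Shell and run the following command to create a new dataset called analytics. Set --location to match the location of the firestore_export dataset created by the extension, because a BigQuery query cannot read from one location and write to another.

```shell
bq mk --location=US analytics
```

-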

Next, create a new query in BigQuery. Instead of clicking on Run to execute the following query once, click on Schedule to run this query on a regular basis (e.g. every hour, every day).

This query extracts the data elements you want (e.g. temp and timestamp) from the copy of your Firestore collection in BigQuery. It then loads them into a new table in the analytics dataset you just created. The posts_raw_changelog table name reflects the extension's Table ID setting (posts by default), so adjust it if you chose a different Table ID.

```sql
CREATE OR REPLACE TABLE `[your-gcp-project-id].analytics.notecard_events`
PARTITION BY DATE(event_time) AS
SELECT
  document_id,
  CAST(JSON_VALUE(data, '$.temp') AS FLOAT64) AS temp,
  TIMESTAMP_MILLIS(
    CAST(
      ROUND(CAST(JSON_VALUE(data, '$.timestamp') AS FLOAT64)) AS INT64
    )
  ) AS event_time
FROM `[your-gcp-project-id].firestore_export.posts_raw_changelog`
WHERE operation != 'DELETE';
```
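To make the scheduled query easier to reason about, here is a rough Python equivalent that applies the same steps to a few changelog rows:

- Skip DELETE operations.
- Parse the JSON string in `data`.
- Cast `temp` to a float.
- Turn the millisecond `timestamp` into a UTC time.

It assumes each row has the `document_id`, `operation`, and `data` columns the query reads. The function name and sample rows are hypothetical. One difference: this sketch raises an error on a missing field, whereas JSON_VALUE returns NULL.

```python
import json
from datetime import datetime, timezone


def to_analytics_rows(changelog: list) -> list:
    """Approximate the scheduled BigQuery query for in-memory changelog rows."""
    rows = []
    for entry in changelog:
        # WHERE operation != 'DELETE'
        if entry["operation"] == "DELETE":
            continue
        data = json.loads(entry["data"])
        # ROUND(CAST(... AS FLOAT64)) then CAST(... AS INT64). Python's round()
        # rounds halves to even while BigQuery's ROUND rounds away from zero;
        # this only matters for fractional milliseconds.
        ms = int(round(float(data["timestamp"])))
        rows.append(
            {
                "document_id": entry["document_id"],
                "temp": float(data["temp"]),  # CAST(JSON_VALUE(...) AS FLOAT64)
                "event_time": datetime.fromtimestamp(ms / 1000, tz=timezone.utc),
            }
        )
    return rows
```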

Set Up Looker Studio

Now that you're routing data from Notehub to your Firestore collection, and BigQuery is pulling that data in so it can be read by Looker Studio, it's time to create a dashboard!

-

Navigate to Google Looker Studio to create the dashboard, and create a Blank Report.

-

From the Google Connectors that appear, choose BigQuery. Then choose the appropriate project, dataset (e.g. analytics), and table (e.g. notecard_events) from your GCP instance.

-

Within the blank Looker Studio canvas, you can now add individual data visualization widgets. Let's start with a simple bar chart:

-

In the properties of the widget, set the following variables:

- Data Source = notecard_events

- Dimension X-Axis = event_time

- Breakdown Dimension = temp

- Metric Y-Axis = temp

-

Next, add a Scorecard widget and choose the Max aggregation in the Primary field section to show the maximum recorded temperature.

-

This gives you the start of a full data dashboard in Looker Studio, which can optionally be themed to match a desired color scheme.

Next Steps

Congratulations! You've created your first Route and connected your Notecard app to an external service. If you're following the Blues Quickstart, you're done!

Now that you know the basics of how Notecard and Notehub work, you’re ready to apply what you’ve learned towards a real project. We have a few recommendations on what to do next:

-

Learn Best Practices for Production Projects: This skimmable guide has a list of best practices for moving your project from a prototype to a real, production-ready deployment.

-

Browse Example Apps: Our collection of IoT apps that show how to build common projects with Blues. These example apps include dozens of official Blues project accelerators, a collection of purpose-built sample apps, and a huge set of projects from our community.

-

Follow the Notehub Walkthrough: Learn how to manage projects and device fleets, update Notecard and host firmware, set up alerts, securely route your data, and more.

At any time, if you find yourself stuck, please reach out on the community forum.